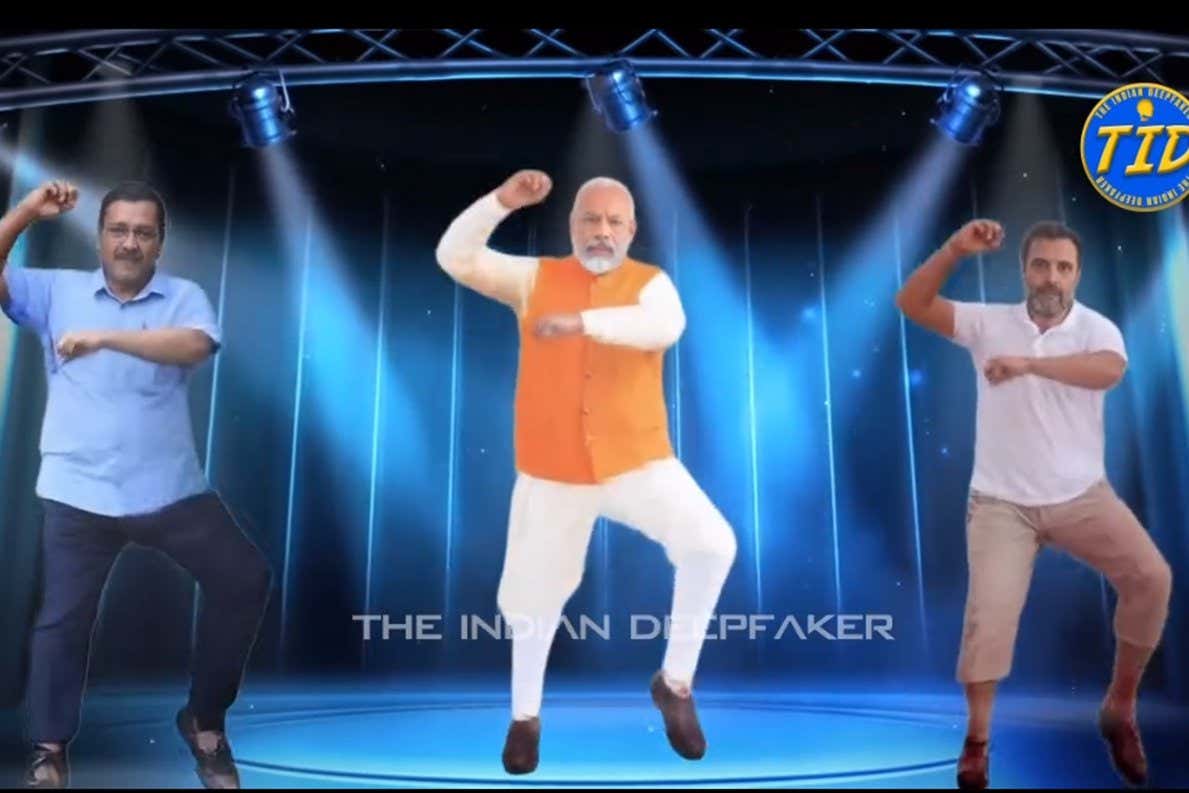

An AI-generated version of India’s prime minister, Narendra Modi, dancing to the song Gangnam Style

@the_indian_deepfaker

Artificial intelligence is enabling India’s politicians to be everywhere at once in the world’s largest election by cloning their voices and digital likenesses. Even dead public figures, such as politician and actress Jayaram Jayalalithaa, are getting digitally resurrected to canvass support in what is shaping up to be the biggest test yet of democratic elections in the age of AI-generated deepfakes.

India’s nearly 970 million eligible voters started going to the polls on 19 April in a multi-phase process lasting until 1 June that will select the next government and prime minister. It has meant booming business for Divyendra Singh Jadoun, whose company The Indian Deepfaker typically uses AI techniques to create special effects for ad campaigns and Netflix productions.

His firm is handling more than a dozen election-related projects, including creating holographic avatars of politicians, using audio cloning and video deepfakes to enable personalised messaging en masse, and deploying a conversational AI agent that identifies itself as AI, but speaks in the voice of a political candidate during calls with voters.

“For the first time, it’s going to be happening on a large scale,” says Jadoun. “There are some political parties that want to try out everything, and even we don’t know what impact it will have.”

Much has changed since India’s current prime minister, Narendra Modi, used 3D hologram technology to broadcast prerecorded speeches at multiple campaign rallies around India in 2014. Now, his AI-generated avatar speaks to voters by name in WhatsApp videos as the use of AI technology in Indian politics has ballooned.

The AI-generated content tends to present campaigners positively, rather than being used for attacks on the opposition, says Joyojeet Pal at the University of Michigan in Ann Arbor. Audiences who are unfamiliar with cutting-edge AI content may well believe the messages, he says, while voters who realise AI is behind them may still enjoy and share them as memes because they align with their political beliefs.

But it isn’t all so squeaky clean. The World Economic Forum’s 2024 Global Risks Report found that Indian experts flagged misinformation and disinformation as the “biggest threat” for their country in the next two years, warning of how inaccurate AI-generated videos could influence voters and fuel protests. In 2018, fake news messages and videos spread through WhatsApp spurred mobs to lynch dozens of people in India.

Jadoun says his company immediately turned down about half of the 200 or so election-related requests it has received as they were “unethical”, such as creating false deepfake videos intended to harm the images of political figures.

But he points out that anyone can make lower-quality deepfakes within minutes using online tools. Policing them is nigh-on impossible.

“In India, the main difference is that the resources expended by policy-makers and companies to examine and deal with these challenges are completely dwarfed by their scale and intensity,” says Divij Joshi at University College London. “The political context is also one where parties encourage extreme speech and hate speech.”

Much will depend on how US tech companies deal with deepfakes on platforms such as Facebook, Instagram, WhatsApp, YouTube and Telegram during India’s election. Meta, the owner of Facebook, has teamed up with a third-party fact-checking network to evaluate possible misinformation in 16 Indian languages and English and plans to label AI-generated content more broadly on its platforms starting in May this year.

Meta says it has also launched a fact-checking helpline on WhatsApp to flag deepfakes and other AI-generated misinformation, in coordination with a new Deepfakes Analysis Unit established by the Misinformation Combat Alliance in India. The tip line team will let WhatsApp users know whether a submitted audio or video sample is AI-manipulated and will forward any potential misinformation to fact-checking partners, says Pamposh Raina, head of the Deepfakes Analysis Unit.

But the bigger challenge is whether the Election Commission of India – which is working with tech companies to address misinformation and disinformation – can manage to ensure that the election remains fair, says Pal. Part of its role is to prohibit any discrimination or incitement based on religion or caste, and to prohibit impersonation.

This article is part of a special series on India’s election.
