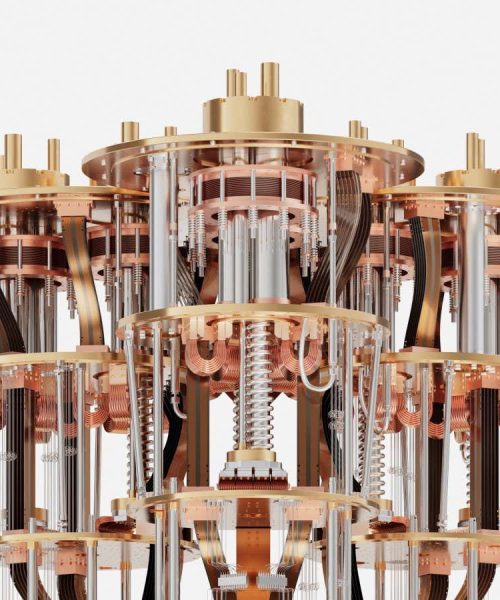

There are many ways for computers to store numbers

Andrew Ostrovsky/Panther Media GmbH/Alamy

Changing the way computers store numbers could improve the accuracy of calculations without increasing energy consumption or computing power, which could prove useful for software that must switch quickly between very large and very small numbers.

Numbers can be surprisingly difficult for computers to work with. The simplest are integers – whole numbers with no decimal point or fraction. As integers grow larger, they require more storage space, which can lead to problems when we attempt to reduce those requirements – the infamous millennium…