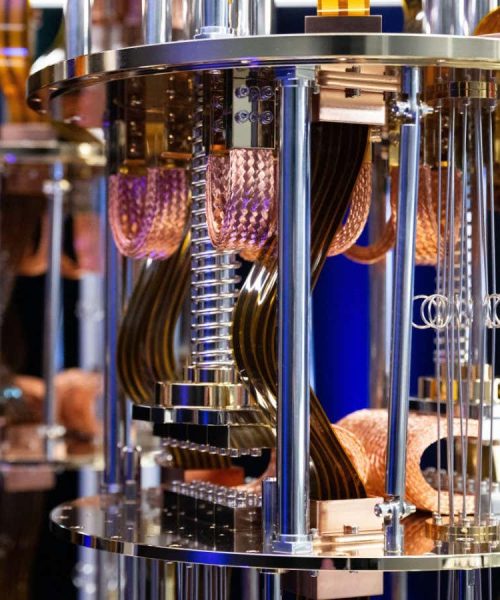

The Hala Point neuromorphic computer is powered by Intel’s Loihi 2 chips

Intel Corporation

Intel has created the world’s largest neuromorphic computer, a device intended to mimic the operation of the human brain. The firm hopes that it will be able to run more sophisticated AI models than is possible on conventional computers, but experts say there are engineering hurdles to overcome before the device can compete with the state of the art, let alone exceed it.

Expectations for neuromorphic computers are high because they are inherently different to traditional machines. While a regular computer uses its processor to carry out operations and stores data in separate memory, a neuromorphic device uses artificial neurons to both store and compute, just as our brains do. This removes the need to shuttle data back and forth between components, which can be a bottleneck for current computers.

This architecture could bring far greater energy efficiency, with Intel claiming its new Hala Point neuromorphic computer uses 100 times less energy than conventional machines when running optimisation problems, which involve finding the best solution to a problem given certain constraints. It could also unlock new ways to train and run AI models that use chains of neurons, much as real brains process information, rather than mechanically passing an input through each and every layer of artificial neurons, as current models do.

Hala Point contains 1.15 billion artificial neurons across 1152 Loihi 2 chips, and is capable of 380 trillion synaptic operations per second. Mike Davies at Intel says that despite this power it occupies just six racks in a standard server case – a space similar to that of a microwave oven. Larger machines will be possible, says Davies. “We built this scale of system because, honestly, a billion neurons was a nice round number,” he says. “I mean, there wasn’t any particular technical engineering challenge that made us stop at this level.”

No other existing machine comes close to the scale of Hala Point, although DeepSouth, a neuromorphic computer due to be completed later this year, will be capable of a claimed 228 trillion synaptic operations per second.

The Loihi 2 chips are still prototypes made in small numbers by Intel, but Davies says the real bottleneck lies in the layers of software needed to take real-world problems, convert them into a format that can run on a neuromorphic computer and carry out the processing. This software is, like neuromorphic computing in general, still in its infancy. “The software has been such a limiting factor,” says Davies, meaning there is little point building a larger machine yet.

Intel suggests that a machine like Hala Point could create AI models that learn continuously, rather than needing to be trained from scratch to learn each new task, as is the case with current models. But James Knight at the University of Sussex, UK, dismisses this as “hype”.

Knight points out that current models like ChatGPT are trained using graphics cards operating in parallel, meaning that many chips can be put to work on training the same model. But because neuromorphic computers work with a single input and cannot be trained in parallel, it is likely to take decades to even initially train something like ChatGPT on such hardware, let alone devise ways to make it continually learn once in operation, he says.

Davies says that while today’s neuromorphic hardware is not suited to training large AI models from scratch, he hopes that one day it could take pre-trained models and enable them to learn new tasks over time. “Although the methods are still in research, this is the kind of continual learning problem that we believe large-scale neuromorphic systems like Hala Point can, in the future, solve in a highly efficient manner,” he says.

Knight is optimistic that neuromorphic computers could provide a boost for many other computer science problems, while also increasing efficiency – once the tools that developers need to write software for this unique hardware become more mature.

They could also offer a better path to approaching human-level intelligence, otherwise known as artificial general intelligence (AGI), which many AI experts think won’t be possible with the large language models that power the likes of ChatGPT. “I think that’s becoming an increasingly less controversial opinion,” says Knight. “The dream is that one day neuromorphic computing will enable us to make brain-like models.”
